This is an automated archive made by the Lemmit Bot.

The original was posted on /r/stablediffusion by /u/Naetharu on 2024-08-20 08:06:34+00:00.

I thought I would share this as I’ve not seen it mentioned elsewhere. I’m working with Flux at the moment, but I wanted to keep some of my VRAM free for other uses. So I made a manual edit to the ComfyUI code to cap its VRAM use below the actual card limit.

The results are interesting.

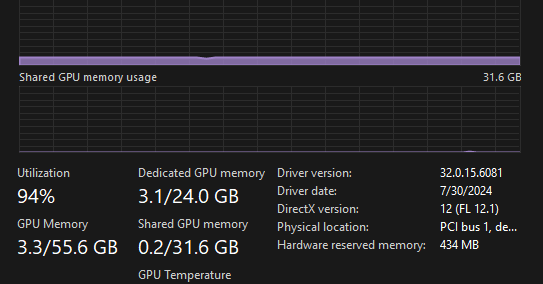

I have an RTX 4090 with 24GB of VRAM and 64GB of system RAM.

Without the changes I can create an 800 x 1200 image in 20 steps in ~17 seconds. As I reduce the VRAM, the generation time increases, as you might expect. But nowhere near as much as I had anticipated.

At 12GB of VRAM allocation I get 1.8s/it for a generation of around 35 seconds.

Most interestingly, I can take this all the way down to just 3GB of VRAM and still get 2.3s/it, for a generation of around 44 seconds in total. This also brings the load on the GPU way down, to around 50% for the 4090.
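To put those numbers side by side, here is a quick bit of arithmetic in Python using only the timings quoted above. The variable and function names are just mine for illustration.

```python
# Figures reported in the post (800 x 1200 image, 20 steps, RTX 4090).
BASELINE_SECONDS = 17           # uncapped run, full 24GB available
capped_runs = {12: 35, 3: 44}   # VRAM cap in GB -> approximate total seconds


def slowdown(total_seconds, baseline=BASELINE_SECONDS):
    """Return how many times longer a capped run takes than the baseline."""
    return total_seconds / baseline


for cap_gb, seconds in capped_runs.items():
    print(f"{cap_gb:>2}GB cap: ~{seconds}s total, {slowdown(seconds):.2f}x the baseline")
```

So cutting the allocation to an eighth of the card (3GB out of 24GB) costs roughly 2.6x in time on these figures, not 8x.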

This allows me to run other GPU-based software (a local LLM in my case) at the same time. It also allows me to run Flux image generation in the background while playing some games.

I’m going to put the information together and make a proposal for adding this as an official option in the UI later. But for now I thought it might be helpful to some others here.

Another note is that hard-coding the limit this way seems to result in better performance and fewer OOM errors than allowing the official calculation to do the work. So if you have a lower-end GPU it might be worth trying to set the memory this way (you can google the number of bytes in a GB to find your optimal numbers) and testing to see if you get more consistent and faster generations.
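If you would rather not look the numbers up, a small helper can do the conversion. This is a sketch that assumes the limit in the code is expressed in bytes and that "GB" means binary gigabytes (1024^3 bytes); the function name is my own.

```python
BYTES_PER_GIB = 1024 ** 3  # 1,073,741,824 bytes in one binary gigabyte


def gib_to_bytes(gib):
    """Convert a VRAM budget in GiB to a whole number of bytes."""
    return int(gib * BYTES_PER_GIB)


for budget in (3, 12):
    print(f"{budget}GB -> {gib_to_bytes(budget)} bytes")
```

For the two caps mentioned above this gives 3221225472 bytes for 3GB and 12884901888 bytes for 12GB.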

The code you need to change is:

1: Line 520 in model_management.py (this sets the lowvram limit for the first load)

2: Line 680 in model_patcher.py (this sets the lowvram limit for subsequent runs)
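The idea behind both edits is the same: wherever the code works out a lowvram budget, use a fixed number instead. The snippet below is only a standalone illustration of that idea, not the actual ComfyUI code at those lines. `HARD_CAP_BYTES` and `pick_lowvram_budget` are hypothetical names, and the "computed" value stands in for whatever the official calculation produces.

```python
# Hypothetical fixed budget: 3GB expressed in bytes (assumes the limit is in bytes).
HARD_CAP_BYTES = 3 * 1024 ** 3


def pick_lowvram_budget(computed_bytes, hard_cap_bytes=HARD_CAP_BYTES):
    """Return the hard-coded budget if one is set, else the computed one.

    computed_bytes stands in for the value the official calculation would
    give; passing hard_cap_bytes=None restores the stock behaviour.
    """
    if hard_cap_bytes is None:
        return computed_bytes
    return hard_cap_bytes


# Example: the stock calculation might allow ~20GB on a 24GB card,
# but the hard-coded cap wins.
print(pick_lowvram_budget(20 * 1024 ** 3))        # 3221225472
print(pick_lowvram_budget(20 * 1024 ** 3, None))  # 21474836480
```

In practice you would simply replace the calculated value on the two lines listed above with your chosen number of bytes. Line numbers shift between ComfyUI versions, so check that the line you are editing really is the one that sets the lowvram limit.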

You can create git branches for the different VRAM configurations so you can easily swap between them before loading Comfy.

EDIT: I just thought I would add that I am using the Nvidia Studio Ready Drivers - I have no idea if this makes any difference, but it is worth mentioning as I assume many folk may be using the Game Ready drivers. If you do want to install the SRD you can do so via GeForce Experience, by going to drivers, and then into the pips option. In theory the SRD is better optimized for studio work such as AI generations and video editing. I’ve not yet tested this with the game drivers.