This is an automated archive made by the Lemmit Bot.

The original was posted on /r/apple by /u/kloolegend on 2024-10-30 22:26:58+00:00.

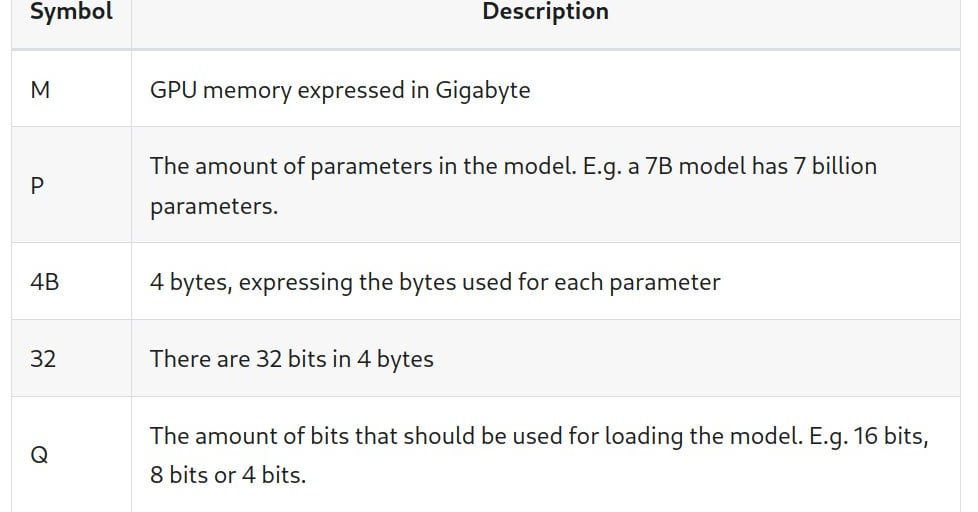

The announcement says it can run models with up to 200B parameters. Meanwhile, my M1 Max with 64GB (the maxed-out chip) is struggling with 70B Llama models. How did Apple benchmark this? Are they lowering the quantization to run larger models?
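For a rough sense of why quantization matters here, this is a back-of-the-envelope sketch of the memory the weights alone would need at different bit widths. The helper name `weight_memory_gb` and the chosen bit widths are my own assumptions for illustration, not anything from Apple's announcement. It ignores the KV cache, activations, and runtime overhead, so real usage would be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights only, in GB (10^9 bytes).

    Hypothetical helper for illustration: parameters * bits / 8 bits per byte.
    Ignores KV cache, activations, and runtime overhead.
    """
    return params_billions * bits_per_weight / 8


# Model sizes mentioned in the post (in billions of parameters).
model_sizes = [70, 200]
# Assumed bit widths: 16-bit (unquantized half precision), 8-bit, 4-bit.
bit_widths = [16, 8, 4]

for size in model_sizes:
    for bits in bit_widths:
        gb = weight_memory_gb(size, bits)
        print(f"{size}B params at {bits}-bit: ~{gb:.0f} GB for weights")
```

By this estimate a 70B model needs about 140 GB at 16-bit, 70 GB at 8-bit, and 35 GB at 4-bit, which would explain why it is tight on 64GB. A 200B model would need roughly 100 GB even at 4-bit, so a figure like that presumably assumes fairly aggressive quantization and a lot more memory than 64GB.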

Good source to check out:
